Why Is Integer Factorization Hard?

In short, currently no one knows for sure whether factorizing integers is hard or easy. In fact, proving either results seems to be a very hard problem, too. In this post rather than getting into arcane technical or academic details, we will just try to show how a certain straightforward approach to solve factorization fails at this time.

In the previous post we showed that the solitaire game we introduced here is as hard as integer factorization. We also showed how to create hard to solve instances for the game: given a hard-to-factorize number such as RSA-2048, we simply create a corresponding initial position from this number's binary representation. Solving this game instance will also solve the corresponding factorization problem. Computer scientists have a name for this procedure of reducing a given problem's instances to instances of another known problem: polynomial time reduction. What about trying to reduce integer factorization to a simpler to understand problem, say one that doesn't have to deal with prime numbers nor deep mathematical concepts?

Let's focus on the number-to-be-factorized bits

How could we express the problem "find two numbers A and B so that A x B = 77" in simpler terms? We could start from the first principles and look again what this means if we write these numbers in binary. Let A = a3a2a1a0 and let B = b3b2b1b0. Recalling that 77 is 10011012 in binary, you can see in Figure 1 how the the multiplication method in base 2 unfolds.

a3a2a1a0

x b3b2b1b0

--------

a3a2a1a0

x3x2x1x0

y3y2y1y0

z3z2z1z0

-------------

1 0 0 1 1 0 1

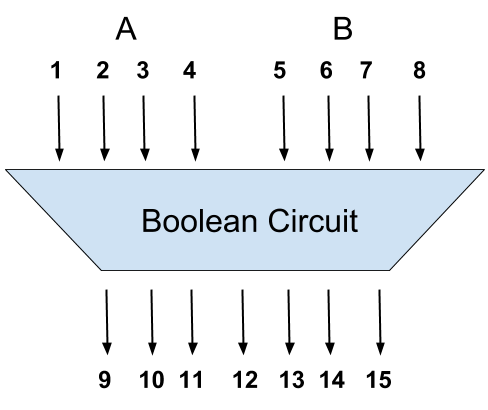

If we take a step back we could see the above expression as a "circuit": we have 8 input bits and 7 output bits and a "black box" performing the multiplication. For simplicity we'll label a0 with 1, a1 with 2, ..., b2 with 7, b3 with 8 etc., as shown in the following image.

The input and output bits can take only two values: 1 or 0 (equivalent to true / false). Whenever we know the value of a bit X we'll use the following notation: if X is 0 we write -X, otherwise we write +X (or omit the + sign). For example, if A = a3a2a1a0 = (4321) = 0101 and B = b3b2b1b0 = (8765) = 1101 we write (1, -2, 3, -4, 5, -6, 7, 8).

The problem of factorizing the number 77 = (15 14 ... 9) = 10011012 is then: find values for the bits 1, 2, ... ,8 so that (15, -14, -13, 12, 11, -10, 9) AND (4321) x (8765) = (15 14 ... 9). There are only two things left to be done: write down (4321) x (8765) = (15 14 ... 9) in a simpler form and find some general way to solve such problems involving Boolean circuits.

The Integer Multiplication Circuit

If we look carefully on how the integer multiplication is expressed in Figure 1, we observe that we need to find a way to convey in a common language facts like the following ones: a bit is the multiplication of two bits: x0 = a0 x b1 , a bit is the sum of two bits: a1 + x0 = u0 , a bit is the sum of 3 bits: a2 + x1 + carry(a1 + x0) = v0 , etc., where +, x are the addition and multiplication modulo 2 (equivalent to the XOR and AND Boolean functions), carry(x,y) is 1 only if both x, y are 1, etc.

One standard way to express the above bit-level equalities is as a list of facts that we expect all of them to be true; the items in this list are called clauses. Each clause is a list of bit level facts that we expect that at least some of them are true; these bit level facts are called literals. Each literal simply expresses whether a certain bit value X should be used in its clause as it is or by taking its complement / negated value not(X) = 1 - X; we are writing the (positive) literal as X and the negated one as -X. As done previously, we'll label bits with positive numbers. Henceforth we'll use the number 0 to mark clearly when a clause is ending.

For example, the fact that the bit 7 is the binary multiplication of bits 3 and 5 is written as a list of three clauses as follows.

7 -3 -5 0

-7 3 0

-7 5 0

Without further ado, here is some Java code that will output clauses for our use cases:

// Returns the complement of var.

int not(int var) {

return -var;

}

// Outputs x = a AND b in clausal form.

void and(int x, int a, int b) {

out(x + " " + not(a) + " " + not(b) + " 0");

out(not(x) + " " + a + " 0");

out(not(x) + " " + b + " 0");

}

// Outputs x = carry(a, b) in clausal form.

void carry(int x, int a, int b) {

out(x + " " + not(a) + " " + not(b) + " 0");

out(not(x) + " " + a + " 0");

out(not(x) + " " + b + " 0");

}

// Outputs x = a + b + c in clausal form.

void xor(int x, int a, int b, int c) {

out(x + " " + not(a) + " " + b + " " + c + " 0");

out(x + " " + a + " " + not(b) + " " + c + " 0");

out(x + " " + a + " " + b + " " + not(c) + " 0");

out(x + " " + not(a) + " " + not(b) + " " + not(c) + " 0");

out(not(x) + " " + not(a) + " " + not(b) + " " + c + " 0");

out(not(x) + " " + not(a) + " " + b + " " + not(c) + " 0");

out(not(x) + " " + a + " " + not(b) + " " + not(c) + " 0");

out(not(x) + " " + a + " " + b + " " + c + " 0");

}

A Java Command Line Tool: IntFact2Sat.java

What we showed so far is that there is in theory a way to reduce any integer factorization instance to a list of Boolean clauses, also known as a Boolean formula in clausal form. These are actually instances of the Satisfying Assignment Search Problem (in short, SAT) that it is know to be an NP complete problem.

You can actually

see here a command line Java program that generates

such instances. (You can also download this ~200 lines Java program here.)

For example, if you run this command tool without any arguments, you get the instance corresponding to

factorizing the number 9:

$java com.crasmaru.sat.IntFact2Sat

p cnf 11 33

c 3: 1 2

c 3: 1 2

6 -1 -4 0

-6 1 0

-6 4 0

7 -2 6 0

7 2 -6 0

-7 2 6 0

-7 -2 -6 0

8 -2 -6 0

-8 2 0

-8 6 0

9 -2 -4 0

-9 2 0

-9 4 0

10 -5 9 8 0

10 5 -9 8 0

10 5 9 -8 0

10 -5 -9 -8 0

-10 -5 -9 8 0

-10 -5 9 -8 0

-10 5 -9 -8 0

-10 5 9 8 0

11 -5 -9 0

11 -5 -8 0

11 -9 -8 0

-11 5 9 0

-11 5 8 0

-11 9 8 0

3 0

-5 0

1 0

-7 0

-10 0

11 0

Note that the line "p cnf 11 33" means that we have a problem in the CNF format with 11 variables and 33 clauses. The lines "c 3: 1 2" describe the solution: 9 = 3 x 3 and 3's corresponding bits are 1, 2 etc.

Using a SAT solver: Z3

Now that we can reduce integer factorization problems to Boolean formulas in clausal form, we could try to use some off-the-shelf SAT solvers. Such a solver is Z3, a state-of-the-art theorem prover from Microsoft Research. Downloading, building and installing Z3 is straightforward. Let's try using it on the simplest problem we have: we save the instance corresponding to factorizing the number 9 in the file "sat2.dim" and then run Z3 on it.

$java com.crasmaru.sat.IntFact2Sat > sat2.dim

$z3 -dimacs sat2.dim -st

sat

model validated

1 2 3 4 -5 6 -7 8 9 -10 11

:total-time 0.00

The Z3 solver first reads the "sat2.dim" file and then, by using some advanced algorithms, tries to find an assignment to the 11 variables so that all 33 clauses are true. The message "model validated" means that Z3 found a solution and the following line is the assignment to the 11 variables. As we know that the 1 and 2 variables are the bits of first factor, that is, 9 = (21) x (43), we can recover the factor in binary: 112 = 3, as expected.

Let's try the harder problem of factoring the 490801757 number (29 bits), and see how is the solving time increasing with respect to the problem size.

$java com.crasmaru.sat.IntFact2Sat 15 > sat15.dim

$head -3 sat15.dim

p cnf 661 3504

c 20297: 1 -2 -3 4 -5 -6 7 -8 9 10 11 12 -13 -14 15

c 24181: 1 -2 3 -4 5 6 7 -8 -9 10 11 12 13 -14 15

$z3 -dimacs sat15.dim -st

sat

model validated

1 -2 3 -4 5 6 7 -8 -9 10 11 12 13 -14 15 16 -17 -18 19 -20 -21 22 -23 24 25 26 27 -28 -29 30 ...

:total-time 0.14

The Z3 solver found a solution in 0.14 seconds. Note that Z3 found 1 -2 3 -4 5 6 7 -8 -9 10 11 12 13 -14 15 as the assignment to the first 15 bits, matching our expected solution (c 24181: 1 -2 3 -4 5 6 7 -8 -9 10 11 12 13 -14 15).

Let's increase the factors size to 20:

$java com.crasmaru.sat.IntFact2Sat 20 > sat20.dim

$head -3 sat20.dim

p cnf 1181 6369

c 941383: 1 2 3 -4 -5 -6 7 -8 9 -10 11 12 13 -14 15 -16 -17 18 19 20

c 557857: 1 -2 -3 -4 -5 6 -7 -8 9 10 -11 -12 -13 -14 -15 16 -17 -18 -19 20

$z3 -dimacs sat20.dim -st

sat

model validated

1 2 3 -4 -5 -6 7 -8 9 -10 11 12 13 -14 15 -16 -17 18 19 20 ...

:total-time 4.07

Z3 factorized the number 525157096231 in 4 seconds. Next challenge: factorize 608987153487677, a 50 bits number.

$java com.crasmaru.sat.IntFact2Sat 25 > sat25.dim

$head -3 sat25.dim

p cnf 1851 10084

c 24354511: 1 2 3 4 -5 -6 7 8 -9 10 11 12 13 -14 -15 16 17 18 -19 -20 21 22 23 -24 25

c 25005107: 1 2 -3 -4 5 6 -7 -8 -9 -10 11 12 -13 -14 -15 16 17 -18 19 20 21 22 23 -24 25

$z3 -dimacs sat25.dim -st

sat

model validated

1 2 -3 -4 5 6 -7 -8 -9 -10 11 12 -13 -14 -15 16 17 -18 19 20 21 22 23 -24 25 ...

:total-time 316.93

It should be clear that while the number of variables is growing at a O(n2) rate with respect to the input length (number of bits), the time Z3 needs to find a solution is growing at a much faster rate. In fact, factorizing 100 bits numbers using the Z3 software might be pretty much impossible, it might take years.

People tried to solve integer factorization instances by using much more powerful tools, for example General Number Field sieve algorithms. What they found out is that all these algorithms are running exponentially longer w.r.t. their input length. And this is the reason why the majority of people believe that integer factorization is a hard problem.

Some Last Notes

To recapitulate: because all integer factorization algorithms that researchers tried so far seem to run

in exponential time w.r.t. input length, the current belief is that this problem

is a hard to solve one. However, while this problem is in NP, it is suspected that it is not NP-hard.

This problem is famous for mainly two reasons:

1. If you can factorize big numbers in reasonable time then you can break the

RSA encryption algorithm.

2. There is an algorithm that factorizes integers in polynomial time:

Shor's algorithm. The catch

is that it runs on a quantum computer.

Integer factorization is one of the many tools used in the attempt to prove the P vs NP problem. We don't know how to generate hard in average SAT instances, however Integer Factorization seems to be hard on average. Therefore the SAT instances built from integer factorization instances should be hard-on-average. There are many studies and online software trying to generate such SAT instances, see for example this paper, or this site, or this another one.

In this post we showed how such a reduction is done and also provided a very short and easy to read Java implementation. In the following we'll also show how to generate SAT instances that are as hard as breaking the DES encryption or the Bitcoin mining protocol.